The Challenge

A little while back, our VP of Product came to us with an interesting problem. We had been hosting some servers in a private colocated data center, and with those servers came a healthy chunk of included bandwidth, which we used to host the release server for the GitKraken Git Client. The release server handles all downloads of the client from our website, and also serves all the updates that our customers receive.

However, as we moved more of our infrastructure into public clouds (AWS/Azure) and decommissioned servers at the private data center, the chunk of included bandwidth shrank and was no longer sufficient for our needs. Paying for overage charges or extra bandwidth was, and still is, cost prohibitive.

Finding a Solution

We needed a solution for distributing our downloads and updates that would fit the following criteria:

- Fast

- Cheap

- No Downtime (and works with existing clients)

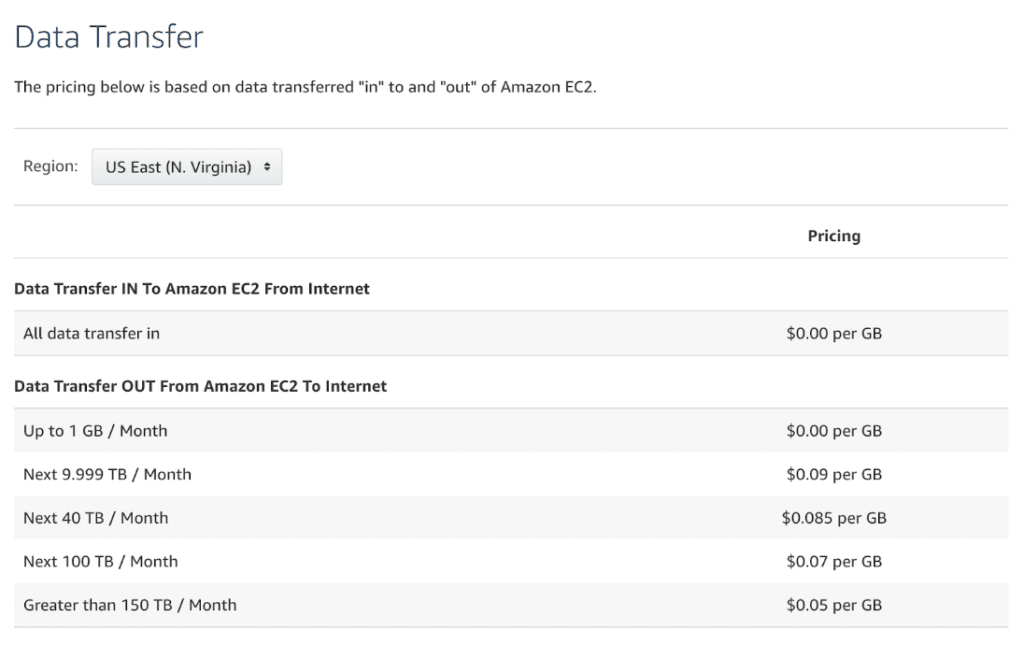

Bandwidth is Expensive

GitKraken is no Facebook, but it isn’t tiny either. In fact, we recently broke the one-million-user mark! Our little-release-server-that-could consistently served multiple terabytes of GitKraken downloads and updates every single day. Serving all of that through AWS would have left us with a bandwidth bill far higher than we were comfortable paying. For reference, 150 TB/month of AWS bandwidth costs over $11,500 USD.
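The $11,500 figure can be roughly reproduced with a tiered calculation. The sketch below is a hypothetical illustration, assuming AWS’s historical internet data-transfer-out tiers (first 10 TB at $0.09/GB, next 40 TB at $0.085/GB, next 100 TB at $0.07/GB, $0.05/GB beyond that), 1 TB = 1,024 GB, and no free tier. Actual rates vary by region and change over time.

```python
# Hypothetical tier table (GB in tier, USD per GB), assuming AWS's historical
# data-transfer-out rates; check current AWS pricing for real numbers.
TIERS = [
    (10 * 1024, 0.09),     # first 10 TB
    (40 * 1024, 0.085),    # next 40 TB
    (100 * 1024, 0.07),    # next 100 TB
    (float("inf"), 0.05),  # everything beyond 150 TB
]


def monthly_egress_cost(total_gb: float) -> float:
    """Return the USD cost of transferring total_gb out in one month."""
    cost = 0.0
    remaining = total_gb
    for tier_size_gb, rate in TIERS:
        billed = min(remaining, tier_size_gb)  # portion that falls in this tier
        cost += billed * rate
        remaining -= billed
        if remaining <= 0:
            break
    return cost


print(round(monthly_egress_cost(150 * 1024), 2))  # prints 11571.2
```

At these assumed rates, 150 TB comes to roughly $11,571 a month, and the bill keeps growing with every additional terabyte served.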

Don’t Pay For Bandwidth

Since bandwidth is expensive and we were trying to keep costs low, we figured the best approach was to eliminate bandwidth costs altogether. Cloudflare doesn’t charge for bandwidth: regardless of the volume, you don’t pay extra for it. Additionally, since Cloudflare’s CDN has Points of Presence (POPs) all over the world, our release files could be served to customers even faster.

Avoid Downtime

Because we like our Customer Success team and wanted to spare them tickets about failed downloads, zero downtime was extremely important for this transition. We also needed to do all of this without editing GitKraken’s code. Since our customers already have the client, and the client already knows how and where to get its updates, this change had to be completely transparent to the copies of the GitKraken Git Client already installed on our customers’ computers.

Step 1: Set up a new domain name

When you use Cloudflare, it becomes the authoritative DNS provider for your domain. For a variety of reasons, we were not prepared to do this for the entire gitkraken.com domain. Instead, we created the axocdn.com domain and hosted it on Cloudflare. This allowed us to move quickly and make the changes we needed without impacting the rest of our DNS and infrastructure.

Step 2: Create the AWS Infrastructure

Using AWS CloudFormation, we created the infrastructure needed to run our release server code.

Step 3: Seed the new release server with the release files

We copied the release files onto the new release server so that it would actually have the updates to serve to clients.
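A seeding step like this is worth verifying, since a truncated installer would be served (and cached) for every client that requests it. A minimal sketch, assuming the release files sit in a local directory and the new server’s storage is reachable as another directory (both paths hypothetical); it copies each file and checks the copy with a SHA-256 digest. This is an illustration, not necessarily how we did it.

```python
import hashlib
import shutil
from pathlib import Path
from typing import List


def sha256_of(path: Path) -> str:
    """Hash a file in 1 MiB chunks so large installers don't load into memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest()


def seed_release_files(src_dir, dst_dir) -> List[str]:
    """Copy every file under src_dir into dst_dir, verifying each copy.

    Returns the relative paths copied; raises if any checksum differs.
    src_dir/dst_dir are hypothetical locations, e.g. a build output folder
    and a volume mounted from the new release server.
    """
    src, dst = Path(src_dir), Path(dst_dir)
    copied = []
    for src_file in sorted(p for p in src.rglob("*") if p.is_file()):
        rel = src_file.relative_to(src)
        dst_file = dst / rel
        dst_file.parent.mkdir(parents=True, exist_ok=True)
        shutil.copy2(src_file, dst_file)  # copies contents and metadata
        if sha256_of(src_file) != sha256_of(dst_file):
            raise RuntimeError(f"checksum mismatch for {rel}")
        copied.append(rel.as_posix())
    return copied
```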

Step 4: Reduce the DNS TTL for release.gitkraken.com

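We wanted to prepare for worst-case scenarios. When we switched over to the new server, or if something went wrong and we needed to roll back, we didn’t want to wait 24+ hours for the DNS change to propagate to all clients. Lowering the TTL ahead of time meant resolvers would cache the record only briefly, so either change would take effect quickly.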
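The timing matters: a lowered TTL only helps once every cached copy carrying the old TTL has expired. A minimal sketch of that arithmetic, assuming an original TTL of 24 hours (inferred from the “24+ hours” figure) and a hypothetical new TTL of 5 minutes. It also assumes resolvers honor TTLs, which not all of them do exactly.

```python
from datetime import datetime, timedelta, timezone


def earliest_safe_flip(ttl_lowered_at: datetime, old_ttl_s: int) -> datetime:
    """Earliest time to change the record so the new, short TTL is in effect.

    A resolver that cached the record just before the TTL was lowered may keep
    serving it (with the old TTL) for up to old_ttl_s seconds.
    """
    return ttl_lowered_at + timedelta(seconds=old_ttl_s)


def worst_case_switch_delay(current_ttl_s: int) -> timedelta:
    """After a change, a resolver may keep serving the stale answer for up to one TTL."""
    return timedelta(seconds=current_ttl_s)


# Hypothetical values: a 24-hour original TTL lowered to 5 minutes.
OLD_TTL_S = 24 * 60 * 60
NEW_TTL_S = 5 * 60

lowered = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)  # hypothetical timestamp
print(earliest_safe_flip(lowered, OLD_TTL_S))  # 2024-01-02 12:00:00+00:00
print(worst_case_switch_delay(NEW_TTL_S))      # 0:05:00
```

In other words, the TTL has to be lowered at least one old TTL before the flip; after that, both the flip and any rollback reach clients within minutes instead of a day.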

Step 5: Flip the DNS

We changed the DNS entry for release.gitkraken.com to point to the newly created release server in AWS so that traffic would begin to flow to it. Using Nginx, we redirected all requests arriving at the new release server for release.gitkraken.com to release.axocdn.com. Because axocdn.com is hosted behind Cloudflare, any users connecting to the release server would now be routed through Cloudflare, which caches our downloads.
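The redirect boils down to a check on the request’s `Host` header. Here is a Python stand-in for that logic, a sketch rather than our actual Nginx configuration. It assumes HTTPS on the new hostname and a 302 (temporary) redirect, so that a rollback would not be undermined by clients caching a permanent redirect. It also assumes the existing clients follow HTTP redirects. `ReleaseHandler` and `redirect_target` are hypothetical names.

```python
from http.server import BaseHTTPRequestHandler
from typing import Optional

OLD_HOST = "release.gitkraken.com"
NEW_BASE_URL = "https://release.axocdn.com"  # scheme is an assumption


def redirect_target(host_header: str, path: str) -> Optional[str]:
    """Return the redirect URL for requests addressed to the old hostname, else None."""
    host = host_header.split(":", 1)[0].lower()  # drop any :port suffix
    if host != OLD_HOST:
        return None  # any other host is served normally (not redirected)
    return NEW_BASE_URL + path  # path keeps any query string


class ReleaseHandler(BaseHTTPRequestHandler):
    """Hypothetical handler: redirects old-host requests, otherwise would serve files."""

    def do_GET(self) -> None:
        target = redirect_target(self.headers.get("Host", ""), self.path)
        if target is not None:
            self.send_response(302)
            self.send_header("Location", target)
            self.end_headers()
        else:
            # Serving the release file itself is out of scope for this sketch.
            self.send_error(404)
```

Because the path and query are carried over unchanged, an installed client asking the old hostname for a given update lands on the same file under the new hostname.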

Step 6: Monitor performance and bandwidth

While the tests we were running indicated everything was working, we kept monitoring to make sure everything ran smoothly. For the first few hours after the change, a large percentage of requests were still reaching our origin server, which made us a little concerned. But as Cloudflare’s POPs filled with our download artifacts, the traffic reaching our origin server shrank dramatically.
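One way to watch this warm-up is to track, hour by hour, what share of requests the edge could not answer from cache. The sketch below assumes request records tagged with the value of Cloudflare’s `CF-Cache-Status` response header. As a simplification, it counts only `HIT` as served from the edge and treats every other status as reaching the origin. The record format and function name are hypothetical.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple

# Simplification: only HIT counts as served from Cloudflare's cache.
EDGE_STATUSES = {"HIT"}


def origin_share_by_hour(records: Iterable[Tuple[int, str]]) -> Dict[int, float]:
    """Map each hour to the fraction of requests that were not edge cache hits.

    records: (hour, cf_cache_status) pairs, e.g. (0, "MISS").
    """
    total: Counter = Counter()
    to_origin: Counter = Counter()
    for hour, status in records:
        total[hour] += 1
        if status.upper() not in EDGE_STATUSES:
            to_origin[hour] += 1
    return {hour: to_origin[hour] / total[hour] for hour in sorted(total)}


# Hypothetical records: misses early on, hits once the cache is warm.
print(origin_share_by_hour([(0, "MISS"), (0, "HIT"), (1, "HIT"), (1, "HIT")]))
# {0: 0.5, 1: 0.0}
```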

The Results

Cloudflare’s CDN reduced bandwidth to our release server by 100%.

Well, technically not all of it, but it was so close to 100% that Cloudflare’s analytics dashboard rounded it up. In reality, about 99.5% of the bandwidth was served from Cloudflare’s cache.
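The gap between the dashboard’s 100% and the real figure is just rounding to a whole percent. A small illustrative calculation, with hypothetical byte counts chosen to give exactly 99.5%:

```python
def offload_percent(cached_bytes: int, total_bytes: int) -> float:
    """Percentage of bytes served from the CDN cache rather than the origin."""
    if total_bytes <= 0:
        raise ValueError("total_bytes must be positive")
    # Multiplying first keeps the intermediate value an exact integer.
    return 100 * cached_bytes / total_bytes


# Hypothetical volumes: 199 of every 200 bytes served from cache.
pct = offload_percent(199, 200)
print(pct)            # 99.5
print(f"{pct:.0f}%")  # 100%
```

Python’s formatting rounds ties to even; with ordinary half-up rounding, 99.5% also displays as 100% at whole-percent precision.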

We will continue to monitor the new setup to make sure it behaves as expected, but apart from new update files having to propagate out to the POPs each time we ship a release, we expect origin traffic to remain at a fairly consistent level.
