Do you want the map to DevOps success, without the risk of anyone swiping results from you? Then you’re in the right place. Let’s explore DORA (not the Nickelodeon character), and specifically DORA metrics.

DORA is the DevOps Research and Assessment group. Founded by Dr. Nicole Forsgren, Jez Humble, and Gene Kim, it was started to conduct academic-style research on DevOps and how organizations were implementing it throughout their software delivery teams. The goal was to understand what makes for a great DevOps transformation.

Many in the industry are still struggling to understand how Dev and Ops fit together, and what it means for agile, or the future of software in general.

Now, let’s go back to the beginning when DevOps was in its infancy. A developer walks past a server room with the doors propped open. That’s never a good sign. They see a lead developer on a new product the company is working to bring to market, and the lead developer has a screwdriver stuck into the server, working to get a hard drive out. That server was used to track all of the work they were doing and the plans for the product. The hard drive had failed and the developer didn’t know what to work on next, so instead of coding, they were trying to fix the server.

This is an example of what economists like to call “Opportunity Costs.” The team in this situation was working on getting a new product to market—a modernization of the existing product line—to be the first to bring tablet-based questionnaires to market in their limited vertical. And here was the lead developer for that project, really the architect, with a screwdriver in a server.

This team needed the right DevOps tools, ones they didn’t have to stick screwdrivers into, so they could get back to spending their time doing engineering work for their customers.

If you’re looking for a tool to streamline your team’s collaboration and workflow, GitKraken’s Git client allows your team to take advantage of the true powers of Git, whether your devs prefer a GUI or CLI.

Software Has Eaten the World

Famously, venture capitalist Marc Andreessen was quoted as saying “software is eating the world.” Considering we live in a time where you order your actual food from an app and then document it by taking a picture of it with another app, we can safely say that software has, in fact, “eaten the world.”

And in this world, the businesses that are able to survive and thrive are those that can quickly adapt to changes in their markets. The way that businesses adapt quickly today is through software. That’s why, more recently, Andreessen was quoted as saying: “Cycle time, that is the time from idea to production or concept to cash, is the most underestimated force in determining winners and losers in tech.” And those winners and losers in tech will determine winners and losers in every industry. Every company is a software company. Their ability to ship better software faster is the thing that will determine if they win or lose in their respective markets.

Go Fast and Break Things: Stability vs Speed

You might be thinking “you can’t just go fast and break things.” To some extent, that’s right. Not only do you have to be concerned about speed, about how fast you can deliver products to market, and how quickly you can adapt those products to match changing market conditions, but you also have to think about the stability of your product and your platforms. Customers will only stay your customers if you’re able to provide them with a stable and reliable product. One that they can count on to be there. One that is secure and safe.

Oftentimes in the technology world, you see these two forces as out of sync. Intuition or preconceptions of what it means to deliver secure and stable products make us think things like: “oh well, you know, the way to keep a system stable is to slow down” or “more changes will only serve to destabilize our systems.” So, you resist the urge to move faster for the sake of stability.

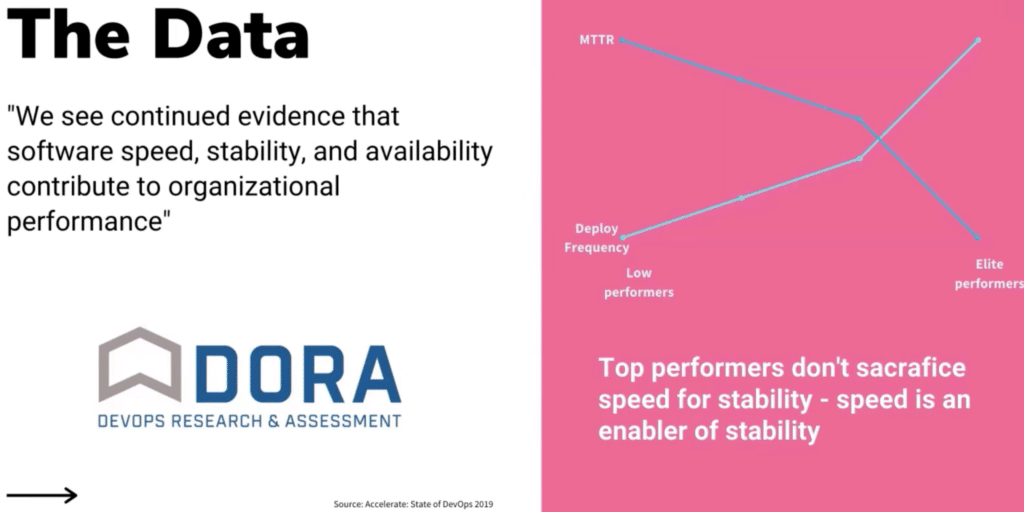

That is completely incorrect. This is one of those cases in life where you can have your cake and eat it too. In fact, not only do the top performers in terms of software delivery operations excel in both speed and stability, there is actually a positive predictive relationship between speed and stability.

Enter DORA Metrics

This is where our friends at DORA come in. They’ve been doing research for over seven years, applying complex statistical analysis to data collected from thousands of organizations to draw meaningful conclusions from said data and identify patterns. They do this to create models that can aid organizations of any size to know what to focus on to improve their own software delivery performance.

They’ve quite literally written the book on the subject. The founders of the DORA program, Dr. Nicole Forsgren, Jez Humble, and Gene Kim, wrote a book based on the aggregated findings of their years of research called “Accelerate: The Science of Lean Software and DevOps: Building and Scaling High Performing Technology Organizations.” This book is highly recommended for all software engineers, engineering managers, product managers, CTOs, CIOs, and CEOs. And really anyone building a business that has any reliance on software.

It’s a fantastic examination of the scientific data and the principles they applied against those data sets to inform the conclusions that are drawn from years of research.

Surprising and Critical Findings from DORA Research

First and perhaps the most surprising is the one that completely debunks the idea of having to make the trade-off for speed versus stability. In the research, they found that high-performing teams, which they call “elite performers,” actually are significantly faster at deploying code. They deployed much more frequently than the low performers.

At the same time, the “mean time to recovery” of their services was significantly less for those elite performers. This refers to the time needed to implement a fix when a production-impacting incident occurs. This holds true even when controlling for other variables, like company size, industry, or other information.

It’s not just that the data shows that some high technology companies are able to move quickly and have more stable systems; it actually shows this happens across industries. You can see these same patterns exist in retail, healthcare, manufacturing, government, and financial services.

What exactly are these elite performers doing better than their lower-performing counterparts? What are the measurements that drive performance? What are the important factors that engineers and leaders should focus on to improve their own performance?

Luckily DORA has been able to answer these questions as well. They found four key measurements that both correlate to higher performance and have predictive relationships with software delivery performance and overall organizational performance. Focusing on the capabilities that enable these key points allows organizations to measure and improve these DORA metrics.

These metrics are directly attributable to how the organization performs overall, in terms of revenue or other organizational goals, as well as human factors like job satisfaction. Improvements in these categories can be driven by all of these DORA metrics, so this is really a groundbreaking discovery, supported by both intuition and data.

The Four Key DORA Metrics

The 4 DORA metrics are:

- Lead Time for Changes

- Deployment Frequency

- Change Failure Rate

- Mean Time to Restore Services

Consider the effectiveness and efficiency of the software development process. The first two metrics listed above are really speaking to speed, while the last two speak to stability. These DORA metrics get at the software deployment processes and their effectiveness in achieving those stability goals for organizations.

Lead Time for Changes

Lead time for changes seems like a simple metric when you think about it. You likely know how long it takes for a change to make it through the whole process. But measuring that with scientific accuracy requires a far more specific definition.

In manufacturing, lead time refers to the time between a customer making a request and the time that that request is satisfied. In software development, it’s a little bit more complicated, due to the fact that there’s design and product engineering time that goes into fulfilling some, if not all, requests. The other end can have multiple definitions too. What if ‘in production’ means waiting for an app store to publish the new version, or even waiting on customers to update the software?

To focus on software delivery performance, lead time for changes specifically refers to the time it takes from code being committed to being in a production-like environment. Or more specifically, that the code is released and ready for production, even if that means there’s additional time spent after that point, but before the customer actually sees the change. That is time that the software and DevOps teams don’t have control over.

Consider this: let’s say you have one line of code to change. How long does it take for that line to get into production? What is every step that line of code has to go through to get there? On the surface, that seems like a pretty simple question, but thinking through this can actually lead to extremely fruitful discussions about the overall bottlenecks of getting code to production.
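To make the definition concrete, here is a minimal sketch of how lead time for changes could be computed once you have two timestamps per change: when the code was committed and when it was released. The sample data and the `lead_times` helper are hypothetical, and the median is used here only as one reasonable way to summarize the numbers; your own tooling may export this data in a different shape.

```python
from datetime import datetime, timedelta
from statistics import median


def lead_times(changes):
    """Return the commit-to-release duration for each change.

    `changes` is a list of (committed_at, released_at) datetime pairs.
    """
    return [released - committed for committed, released in changes]


# Hypothetical sample data: when each change was committed and released.
sample_changes = [
    (datetime(2024, 3, 4, 9, 0), datetime(2024, 3, 4, 15, 0)),   # 6 hours
    (datetime(2024, 3, 5, 10, 0), datetime(2024, 3, 6, 10, 0)),  # 24 hours
    (datetime(2024, 3, 6, 8, 0), datetime(2024, 3, 6, 20, 0)),   # 12 hours
]

# The median is less sensitive to one unusually slow change than the mean.
median_lead_time = median(lead_times(sample_changes))
print(median_lead_time)  # 12:00:00
```

Even a rough calculation like this can anchor the "how long does one line of code take?" conversation in data rather than guesswork.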

Deployment Frequency

The other DORA metric that sets teams apart when it comes to delivering better software faster is deployment frequency. How often are you deploying code to production? At first, it may seem counterintuitive that deploying code more often, literally changing things more often, can actually have a positive correlation to system stability. Intuition says making sure changes to production are slow and infrequent will make the system better in the end, or at least more stable.

But in practice, that’s just not true! And there are a lot of factors discussed in the DORA reports and the previously mentioned DevOps book that go into detail about this. Think about deploying to production like a muscle. The more you work out that muscle and build muscle memory, the stronger and better it becomes. If you find that your team dreads releases or deploy days or you’re scared to deploy on Fridays, the answer might not be to deploy less, but to deploy more!

The top performers are deploying multiple times per day. They’ve built up that muscle so that deploying on Friday is no worse than any other day. The system they’ve built has resilience and reliability because it has had many at-bats with deployments and testing.
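As a rough illustration, deployment frequency can be estimated by counting production deployments over a window of days. The sketch below assumes you can export a list of deployment dates from your pipeline; the `deployments_per_day` helper and the sample dates are made up for the example.

```python
from collections import Counter
from datetime import date


def deployments_per_day(deploy_dates, start, end):
    """Average number of production deployments per calendar day in [start, end]."""
    days_in_window = (end - start).days + 1
    in_window = [d for d in deploy_dates if start <= d <= end]
    return len(in_window) / days_in_window


# Hypothetical sample data: one entry per production deployment.
sample_deploys = [
    date(2024, 3, 4), date(2024, 3, 4),
    date(2024, 3, 5),
    date(2024, 3, 7),
    date(2024, 3, 8), date(2024, 3, 8), date(2024, 3, 8),
]

# Seven deployments over a seven-day window.
rate = deployments_per_day(sample_deploys, date(2024, 3, 4), date(2024, 3, 10))
print(rate)  # 1.0

# The busiest day and how many deployments it saw.
busiest = Counter(sample_deploys).most_common(1)[0]
print(busiest)  # (datetime.date(2024, 3, 8), 3)
```

In this made-up week, the busiest day happens to be a Friday, which is exactly the kind of pattern a team with a well-exercised deployment muscle would not think twice about.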

Change Failure Rate

Speaking of deploying without worrying, let’s talk about how often you create a problem in production when deploying or changing something. That is called the change failure rate.

Does your team have an empirical way to look at this today? Do you know what percentage of production changes cause an issue for your users? Today, many folks aren’t tracking this type of specific data, with most doing this calculation from anecdotal information at best.

Even if organizations are making a conscious effort to track this data, the change failure rate can be next to impossible to measure. It’s very hard to trace a production issue back through the entire development lifecycle and identify which change caused it. Thus, many fixes end up in a situation like “hey, let’s close this barn door after the horse already got out.”

But as with the other DORA metrics, measuring and focusing improvements on this rate shows direct predictive relationships between change failure rate and the overall software delivery and operational performance of our teams.
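Once each deployment can be linked to whether it caused a production issue, the calculation itself is simple. The following is a minimal sketch under that assumption; the `Deployment` record and its `caused_incident` flag are hypothetical names, and getting that flag right is the hard part described above.

```python
from dataclasses import dataclass


@dataclass
class Deployment:
    deploy_id: str         # hypothetical identifier from your pipeline
    caused_incident: bool  # True if this change led to a production issue


def change_failure_rate(deployments):
    """Fraction of production deployments that caused an issue for users."""
    if not deployments:
        raise ValueError("at least one deployment is needed to compute a rate")
    failures = sum(1 for d in deployments if d.caused_incident)
    return failures / len(deployments)


# Hypothetical sample data: eight deployments, two of which caused incidents.
sample = [Deployment(f"deploy-{i}", caused_incident=(i in (3, 6))) for i in range(8)]

rate = change_failure_rate(sample)
print(f"{rate:.0%}")  # 25%
```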

Mean Time to Recovery

The mean time to recovery, or MTTR, measures how fast fixes go into effect. The number of production incidents will never be zero, even for the largest technology organizations in the world that have the best distributed systems engineers. With that being said, elite-performing software organizations work with methods like Site Reliability Engineering (SRE) and Service Level Objectives (SLOs), and have error budgets to ensure that when incidents do happen, the length of time they impact users is minimized as much as possible.
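As a simple sketch, MTTR can be computed by averaging how long each incident lasted, from the moment users were impacted to the moment service was restored. The incident timestamps below are invented for illustration, and `mean_time_to_recovery` is a hypothetical helper, not part of any particular tool.

```python
from datetime import datetime, timedelta


def mean_time_to_recovery(incidents):
    """Average duration of incidents given (started_at, restored_at) pairs."""
    durations = [restored - started for started, restored in incidents]
    # Start the sum from a zero timedelta so the durations add correctly.
    return sum(durations, timedelta()) / len(durations)


# Hypothetical sample data: when each incident started and was resolved.
sample_incidents = [
    (datetime(2024, 3, 4, 10, 0), datetime(2024, 3, 4, 10, 30)),  # 30 minutes
    (datetime(2024, 3, 9, 22, 0), datetime(2024, 3, 9, 23, 30)),  # 90 minutes
    (datetime(2024, 3, 15, 6, 0), datetime(2024, 3, 15, 7, 0)),   # 60 minutes
]

mttr = mean_time_to_recovery(sample_incidents)
print(mttr)  # 1:00:00
```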

Visualizing DORA Metrics

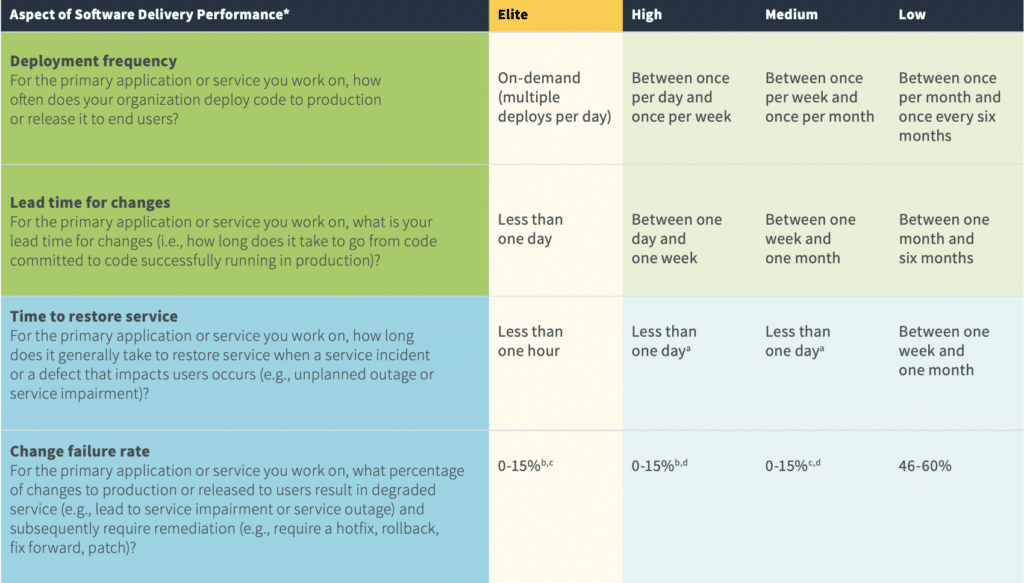

What do these DORA metrics really look like? How can you measure yourself against peers in the industry? DORA reports provide the ability to do exactly that.

The table shown here is from the 2019 State of DevOps Report.

It is interesting to see the disparity that still exists looking at deploying multiple times a day versus once every six months. That has a huge impact on an organization’s ability to adjust to market conditions. Just think about how quickly things change these days. Those who are able to focus on where they are now on this chart and build measurements to improve will be able to out-compete their competition in new and innovative ways they haven’t even thought of yet. But there’s still a problem.

The problem is that measuring all these things can be extremely complicated. If you measure it, you can focus on improving it, but today’s tooling doesn’t always make it easy to have a clear understanding of even the simplest things. For example: when did code get committed? When did it get put into production? In a world of disconnected tools, each doing an independent job, the software development lifecycle can be a black box. Code goes in one side, bounces around a bunch of teams and different tools, and then hopefully comes out the other side into production at some point.

If you have many different unintegrated systems, it can be really hard, if not impossible, to understand how to measure these types of DORA metrics. Or visualize them and then make them actionable for your team.

Making DORA Metrics Actionable

To make DORA metrics actionable, you have to be able to understand where time is wasted in the process. Often as engineers, you can see this easily, but only anecdotally. You might say “oh it takes a long time for security to pass a build” or “testing takes too long we haven’t had enough time to automate a lot of it yet.”

Turning these anecdotes into data that anyone at any level can see, from instrumentation and control engineers all the way to the CTO, is critical in building organization-wide buy-in to improving the places where time is really wasted.
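One way to turn an anecdote like "security takes a long time" into data is to total the time changes spend in each stage and rank the stages. The sketch below assumes you can collect a duration per change per stage; the stage names, the durations, and the `time_by_stage` helper are all hypothetical.

```python
from collections import defaultdict
from datetime import timedelta


def time_by_stage(records):
    """Total time spent in each stage, ranked from slowest to fastest.

    `records` is a list of (change_id, stage, duration) tuples.
    """
    totals = defaultdict(timedelta)  # missing stages start at zero duration
    for _change_id, stage, duration in records:
        totals[stage] += duration
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)


# Hypothetical sample data for two changes moving through three stages.
sample_records = [
    ("change-1", "code review", timedelta(hours=4)),
    ("change-1", "automated tests", timedelta(hours=1)),
    ("change-1", "security scan", timedelta(hours=10)),
    ("change-2", "code review", timedelta(hours=2)),
    ("change-2", "automated tests", timedelta(hours=1)),
    ("change-2", "security scan", timedelta(hours=14)),
]

ranked = time_by_stage(sample_records)
for stage, total in ranked:
    print(f"{stage}: {total}")
```

In this invented data set, the security scan accounts for most of the waiting time, which is the kind of finding that is much easier to act on when everyone can see the numbers.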

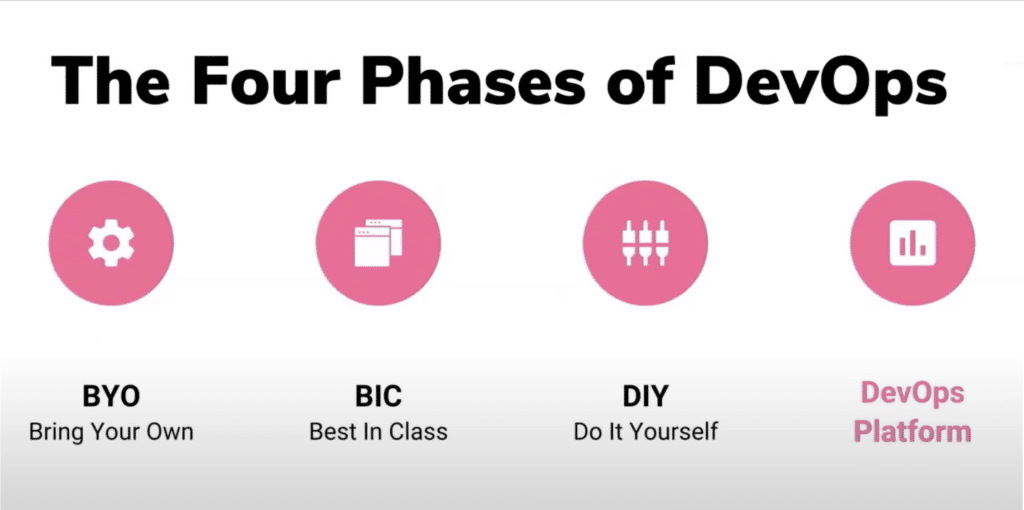

Just as the DORA team has seen elite performers adapting and improving over their years and years of research, we have also seen an evolution in the way teams think about their DevOps toolchains. DevOps is over 10 years old and has gone through a number of different phases.

The Four Phases of DevOps

The four phases of DevOps are:

- Bring Your Own (BYO)

- Best In Class (BIC)

- Do it Yourself (DIY)

- DevOps Platform

Bring Your Own DevOps

In the Bring Your Own (BYO) DevOps phase, each team selects their own tools when they come together to create a single product or application. This approach can cause problems when teams attempt to work together because they won’t be familiar with the tools of other teams, or may even lack access to the same tools and data.

Consider the case where the security testing is all done by one person. At the end of an eight-week development cycle, having a single reviewer will often lead to hundreds of new security bugs that have to be carefully examined and fixed. All the while, the engineers have no access to that data before release time. This leads to frustrations all around.

Best in Class DevOps

To address these problems of disconnected tools and data, many organizations move to the second phase: Best In Class DevOps. In this phase, organizations standardize on the same set of tools for all teams, with one preferred tool for each stage of the DevOps lifecycle. Sometimes, these will be tools that allow for some level of integration, but often, it’s still a very diverse set of unattached tools.

These independent Best in Class tools can likely help team members collaborate with each other, but the problem then becomes how to best integrate and adapt the current workflows to leverage these tools. It often falls on the individual teams to implement these tools themselves, leading to a lack of consistency across teams.

Do It Yourself DevOps

Do It Yourself (DIY) DevOps builds on top, and in between, all of the Best in Class tools. Teams in this phase perform a lot of custom work to integrate their DevOps point solutions together. However, since these tools are developed independently, they may never fit just right. The way different tools report and interact with multiple data points, like those that are part of DORA research, can vary greatly. Anyone that’s ever dealt with a large-scale data integration project can tell you what a huge struggle this is.

These DIY DevOps tools require significant effort for upgrades and changes. Modification to each of these tools can easily break the brittle integration points or even threaten to make the data incompatible with current data sets. All of this effort actually results in a higher cost of ownership when compared to just sticking with Best In Class. Worse yet, with engineers spending time maintaining tooling integration rather than working on their core product, you’re back to screwdrivers and servers.

GitKraken was built with both team collaboration and developer experience in mind, prioritizing an identical experience across operating systems for our Git client, making it easy to adopt streamlined processes across your teams.

A DevOps Platform Approach

A platform approach is needed to improve both the team experience and business efficiency. Many large organizations refer to their DIY tools as “platforms,” but they lack the integration of a single application, a single data plane, and a single place for user role management.

A DevOps platform is a single application, powered by a cohesive user experience, agnostic of being self-managed or SaaS deployed. It’s built on a single code base with a unified data store which allows organizations to resolve all these inefficiencies and vulnerabilities in DIY toolchains.

A DevOps platform approach allows organizations to replace their DIY DevOps. This allows for visibility throughout and control over all stages of the DevOps lifecycle. A company’s very business survival depends on its ability to ship software. To ship better software faster, you have to be able to control, measure, and understand how your teams are building that software.

Bringing teams together can’t just be a catchphrase. It has to be the reality of the operating model of our organizations. Without that, you’ll never understand and realize the real promise of DevOps.

In much more practical terms, this means moving teams to the same tools to optimize for team productivity. This move shortens cycle time, increases deployment frequency, lowers MTTR, and reduces the change failure rate.
